Artificial intelligence is evolving rapidly, and so are the ways AI systems connect to real-time data. One of the latest advancements is the Model Context Protocol (MCP), an open standard that allows AI applications to access files, APIs, and tools directly, without requiring intermediate steps such as generating embeddings or running vector searches. In this article, we’ll explore what an MCP server is, how it works, and why it could transform the future of AI.

What is an MCP Server?

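An MCP server is a key component of the Model Context Protocol. It acts as a bridge between an AI application and a data source or service, exposing that source’s capabilities (tools the model can call, resources it can read, and reusable prompts) so that information can be queried and retrieved in real time.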

Unlike Retrieval-Augmented Generation (RAG) systems, which require generating embeddings and storing documents in a vector database ahead of time, an MCP server queries the data source directly at request time, without prior indexing. The information returned therefore reflects the current state of the source, and there is no index to build, store, or keep in sync, which can lower computational overhead and avoids keeping an extra copy of the data.

How Does MCP Work?

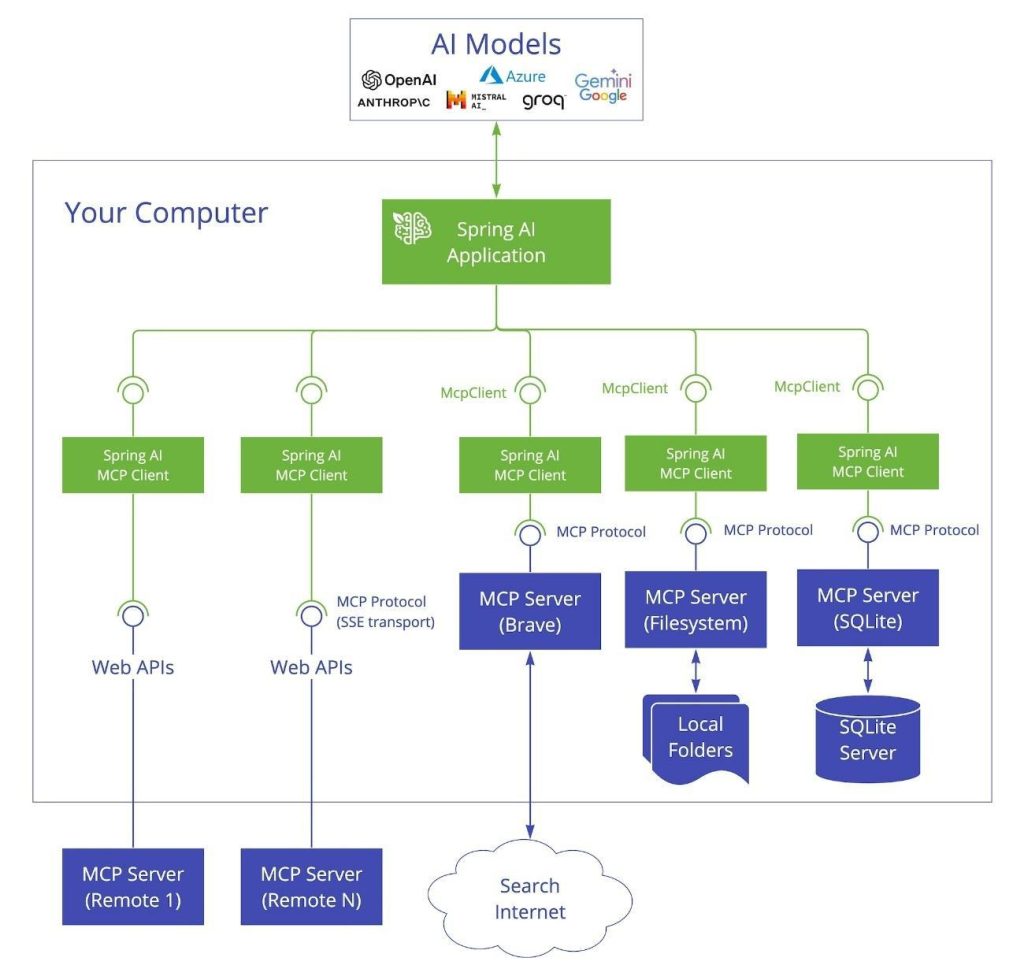

The Model Context Protocol connects AI applications to data sources through MCP servers, using a client-server architecture built from the following components:

- MCP Hosts: Applications that request information from an MCP server (e.g., AI assistants like Claude or ChatGPT).

- MCP Clients: Components inside the host that each maintain a one-to-one connection with an MCP server and handle communication with it using the protocol.

- MCP Servers: Programs that each expose specific capabilities, such as access to files, databases, and APIs, through the standardized protocol.

- Data Sources: Local or cloud-based systems from which real-time information is retrieved.
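To make the division of roles concrete, here is a minimal in-process sketch. The class names, the `files` and `weather` servers, and their tools are all hypothetical; a real MCP deployment exchanges JSON-RPC messages over a transport (such as stdio or HTTP) rather than calling Python methods directly.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical, in-process stand-ins for the MCP roles.
# Real MCP traffic is JSON-RPC 2.0 over a transport; that layer is omitted.


@dataclass
class Server:
    """Exposes named capabilities backed by some data source."""
    name: str
    tools: dict[str, Callable[..., str]]

    def call(self, tool: str, **args) -> str:
        return self.tools[tool](**args)


@dataclass
class Client:
    """Lives inside the host and talks to exactly one server (1:1)."""
    server: Server

    def call(self, tool: str, **args) -> str:
        return self.server.call(tool, **args)


@dataclass
class Host:
    """The AI application; it owns one client per connected server."""
    clients: dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> None:
        self.clients[server.name] = Client(server)

    def ask(self, server_name: str, tool: str, **args) -> str:
        return self.clients[server_name].call(tool, **args)


# Hypothetical data sources: a local file listing and a remote weather service.
files = Server("files", {"list_files": lambda: "report.pdf, notes.txt"})
weather = Server("weather", {"forecast": lambda city: f"Sunny in {city}"})

host = Host()
host.connect(files)
host.connect(weather)
print(host.ask("weather", "forecast", city="Madrid"))  # Sunny in Madrid
```

Note that the host never talks to a server directly: every call goes through the dedicated client for that server.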

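When a user submits a query, the AI application sends a request through its MCP client to the relevant MCP server. The server retrieves the data from the appropriate source and returns it to the host, which adds it to the model’s context, with no embedding or indexing step in between.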
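MCP messages are JSON-RPC 2.0 objects, and servers advertise and run tools through the `tools/list` and `tools/call` methods. The sketch below shows how a server might answer those two requests; the `get_order_status` tool and the `ORDERS` dictionary are hypothetical, and the transport and initialization handshake are left out.

```python
import json

# Hypothetical data source; a real server might query a database or an API here.
ORDERS = {"A-100": "shipped", "A-101": "processing"}

# Metadata the server advertises in response to tools/list.
# inputSchema is a JSON Schema describing the tool's arguments.
TOOLS = [
    {
        "name": "get_order_status",  # hypothetical tool
        "description": "Look up the current status of an order.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
]


def error(request_id, code: int, message: str) -> dict:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Answer one JSON-RPC 2.0 request roughly the way an MCP server would."""
    method = request.get("method")
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        params = request.get("params", {})
        if params.get("name") != "get_order_status":
            return error(request["id"], -32602, f"Unknown tool: {params.get('name')}")
        order_id = params.get("arguments", {}).get("order_id", "")
        # The data source is read at call time; nothing was indexed beforehand.
        status = ORDERS.get(order_id, "unknown order")
        result = {"content": [{"type": "text", "text": status}], "isError": False}
    else:
        return error(request["id"], -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# What the host's MCP client might send once the model decides to use the tool.
request = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "get_order_status", "arguments": {"order_id": "A-100"}},
}
print(json.dumps(handle(request), indent=2))
```

The text inside `content` is what the host would place into the model’s context as the tool result.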

Key Benefits of MCP

Compared with retrieval architectures such as RAG, implementing MCP in AI systems offers several advantages:

1. Real-Time Access 📅

With MCP, AI models can query databases and APIs in real time, which reduces the risk of outdated responses and removes the need for periodic re-indexing.
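The difference between querying at request time and working from a pre-built index can be shown with an in-memory SQLite table. This is a simplified sketch: the `snapshot` dictionary stands in for an index built at ingestion time (it is not a real embedding pipeline), and `get_stock` is a hypothetical MCP-style tool.

```python
import sqlite3
from typing import Optional

# Hypothetical live data source: an in-memory SQLite table of stock levels.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, qty INTEGER)")
db.execute("INSERT INTO stock VALUES ('widget', 10)")

# Stand-in for an index built once at ingestion time (RAG-style).
snapshot = dict(db.execute("SELECT sku, qty FROM stock"))


def get_stock(sku: str) -> Optional[int]:
    """Hypothetical MCP-style tool: query the source at the moment of the call."""
    row = db.execute("SELECT qty FROM stock WHERE sku = ?", (sku,)).fetchone()
    return row[0] if row else None


# The data changes after the snapshot was taken.
db.execute("UPDATE stock SET qty = 3 WHERE sku = 'widget'")

print(snapshot["widget"])   # 10 (stale until re-indexed)
print(get_stock("widget"))  # 3 (current value)
```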

2. Enhanced Security and Control 🔒

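Because MCP does not require copying data into an intermediate store such as a vector database, it reduces the risk of data leaks and helps keep sensitive information within the enterprise or user environment. Each MCP server also decides exactly which data and operations it exposes.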
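One way a server can limit what the model reaches is to confine a file-reading tool to a single directory. The sketch below assumes a hypothetical `read_file` tool built by `make_read_file_tool`; it illustrates one server-side safeguard, not a complete security model, and MCP does not perform this check for you.

```python
import tempfile
from pathlib import Path


def make_read_file_tool(allowed_root: str):
    """Build a hypothetical read-only file tool confined to one directory."""
    root = Path(allowed_root).resolve()

    def read_file(relative_path: str) -> str:
        # Resolve ".." and symlinks before checking, so "../x" cannot escape.
        target = (root / relative_path).resolve()
        if not target.is_relative_to(root):  # Python 3.9+
            raise PermissionError(f"{relative_path!r} is outside the allowed directory")
        return target.read_text(encoding="utf-8")

    return read_file


with tempfile.TemporaryDirectory() as tmp:
    shared = Path(tmp, "shared")
    shared.mkdir()
    (shared / "policy.txt").write_text("Public policy text", encoding="utf-8")
    Path(tmp, "payroll.csv").write_text("confidential", encoding="utf-8")

    read_file = make_read_file_tool(str(shared))
    print(read_file("policy.txt"))  # Public policy text
    try:
        read_file("../payroll.csv")
    except PermissionError as exc:
        print("blocked:", exc)
```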

3. Lower Computational Load ⚡

RAG systems rely on embeddings and vector searches, which consume significant computational resources both when documents are indexed and when queries are embedded and matched. MCP does not require either step, which can lead to lower costs and higher efficiency.

4. Flexibility and Scalability 🔗

Any AI application that supports MCP can connect to different systems through their MCP servers without structural changes, making it well suited to companies that operate across multiple platforms and databases.
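Part of this flexibility comes from discovery: instead of hard-coding each integration, a host can ask every connected server for its tools with `tools/list` and build a catalog at runtime. In this sketch the `crm_server` and `erp_server` stubs and their tool names are hypothetical, and each stub only answers `tools/list`.

```python
from typing import Callable


# Hypothetical servers, modeled as functions that answer JSON-RPC requests.
# These stubs only implement tools/list.
def crm_server(request: dict) -> dict:
    tools = [{"name": "find_customer", "inputSchema": {"type": "object"}}]
    return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": tools}}


def erp_server(request: dict) -> dict:
    tools = [
        {"name": "get_invoice", "inputSchema": {"type": "object"}},
        {"name": "list_suppliers", "inputSchema": {"type": "object"}},
    ]
    return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": tools}}


def build_catalog(servers: dict[str, Callable[[dict], dict]]) -> dict[str, str]:
    """Map every discovered tool name to the server that provides it."""
    catalog = {}
    for request_id, (server_name, server) in enumerate(servers.items(), start=1):
        response = server({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})
        for tool in response["result"]["tools"]:
            catalog[tool["name"]] = server_name
    return catalog


print(build_catalog({"crm": crm_server, "erp": erp_server}))
# {'find_customer': 'crm', 'get_invoice': 'erp', 'list_suppliers': 'erp'}
```

Adding a third system means connecting one more server; `build_catalog` itself does not change. A production host would also need a policy for tool names that collide across servers, which this sketch ignores.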

MCP vs. Traditional Integration: A Smarter Connection

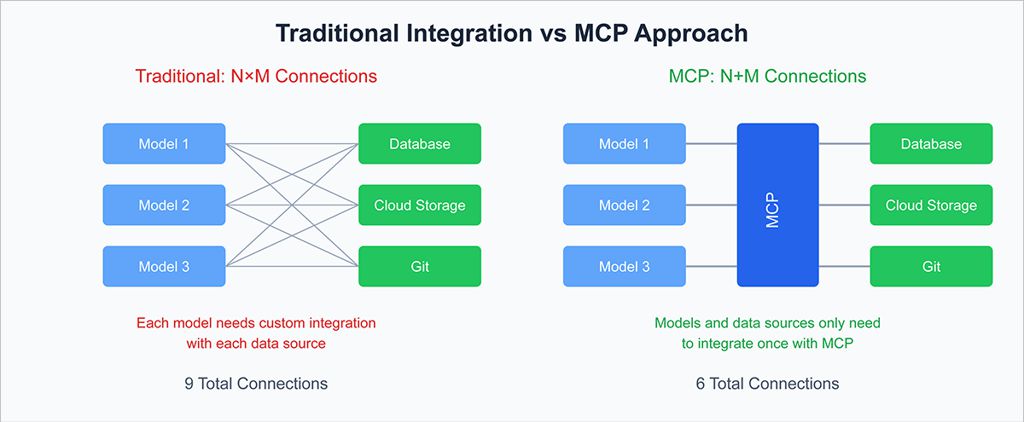

Comparison between traditional integration and MCP.

The diagram above compares two approaches to integrating AI models with data sources:

Traditional Integration

- Each AI model must manually integrate with each data source.

- This results in N × M integrations for N models and M data sources, so the number of connections grows multiplicatively: 5 models and 10 data sources already require 50 separate integrations.

- Developers must write custom integration code every time a new data source is added, consuming time and resources.

MCP Approach

- MCP acts as an intermediary between AI models and data sources, reducing the number of required integrations.

- Instead of N × M integrations, MCP needs only N + M: each AI application implements the protocol once, and each data source is exposed once through an MCP server, greatly simplifying the architecture.

- This means developers can connect new tools without writing repetitive code, allowing them to focus on building better features instead of dealing with integrations.
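The arithmetic behind the N × M versus N + M comparison can be checked with a few lines of code. The function names are illustrative only.

```python
def point_to_point(models: int, sources: int) -> int:
    """Traditional integration: one custom connector per (model, source) pair."""
    return models * sources


def via_mcp(models: int, sources: int) -> int:
    """MCP: each AI application implements the protocol once (an MCP client),
    and each data source is wrapped once (an MCP server)."""
    return models + sources


for n, m in [(3, 4), (5, 10), (20, 50)]:
    print(f"N={n:>2}, M={m:>2}: N*M = {point_to_point(n, m):>4}, N+M = {via_mcp(n, m):>3}")
```

For 20 models and 50 data sources, the gap is 1,000 custom connectors versus 70 protocol implementations.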

Thus, MCP not only simplifies connectivity but also improves scalability and security, because every integration goes through a single standardized interface instead of many custom connectors.

How to Get Started with MCP

For those interested in implementing MCP, the first step is setting up an MCP server and connecting it to the relevant data sources. Official SDKs for languages such as Python, Java, and TypeScript simplify this integration.
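The official SDKs handle the protocol details for you, but it helps to see roughly what a server does underneath. With the stdio transport, the host launches the server as a subprocess and the two exchange newline-delimited JSON-RPC messages over stdin and stdout. The stdlib-only sketch below answers `initialize`, `ping`, and `tools/list`; the server name is hypothetical, and `"2024-11-05"` is one published revision of the protocol, so check the current specification before relying on it.

```python
import json
import sys
from typing import IO, Optional

PROTOCOL_VERSION = "2024-11-05"  # one published spec revision; verify the current one


def respond(message: dict) -> Optional[dict]:
    """Return a JSON-RPC response, or None when no reply is expected."""
    if "id" not in message or "method" not in message:
        return None  # notifications (e.g. notifications/initialized) and responses
    method = message["method"]
    if method == "initialize":
        result = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "demo-server", "version": "0.1.0"},  # hypothetical
        }
    elif method == "ping":
        result = {}
    elif method == "tools/list":
        result = {"tools": []}  # no tools registered in this sketch
    else:
        return {"jsonrpc": "2.0", "id": message["id"],
                "error": {"code": -32601, "message": f"Method not found: {method}"}}
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}


def serve(stdin: IO[str] = sys.stdin, stdout: IO[str] = sys.stdout) -> None:
    """Read one JSON-RPC message per line and write one response per line."""
    for line in stdin:
        if not line.strip():
            continue
        reply = respond(json.loads(line))
        if reply is not None:
            # json.dumps escapes newlines inside strings, so each message stays on one line.
            stdout.write(json.dumps(reply) + "\n")
            stdout.flush()
```

In a real script, `serve()` would be called at startup; registering actual tools, validating arguments, and negotiating protocol versions are the parts an SDK takes care of.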

Additionally, developers can explore pre-built solutions and documentation at modelcontextprotocol.io to learn more about its applications and benefits.

Conclusion

The Model Context Protocol (MCP) represents a significant shift in how AI models interact with real-time data. By removing the need for intermediate processes like embeddings and vector databases, MCP offers a more efficient, secure, and scalable way to connect models to live data.

If the future of AI lies in its ability to adapt and provide accurate, real-time information, then MCP could become the new standard for AI model connectivity.

At hiberus, we are ready to help you implement AI in your organization. Our expertise in generative AI allows us to design personalized solutions that drive your business toward the future.

Contact us to discover how AI can revolutionize your business!
